In this post I will talk about a bottleneck we had when handling OVSDB events in the ML2/OVN driver for OpenStack Neutron, and how we solved it.

Problem description

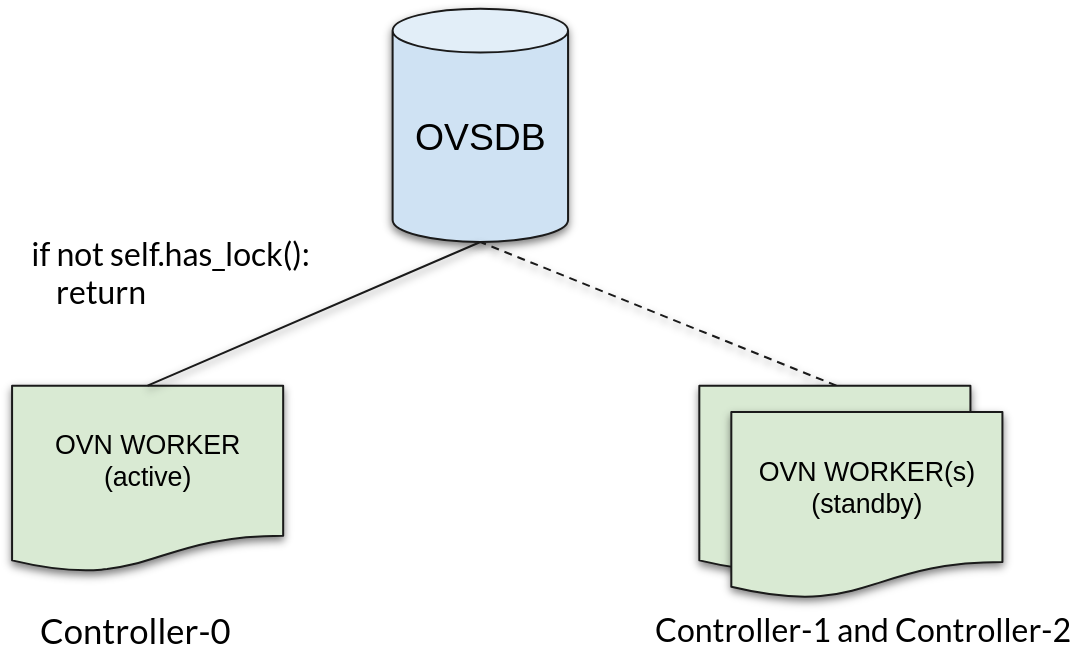

In the ML2/OVN driver, the OVSDB Monitor class is responsible for listening to OVSDB events and performing certain actions on them. We use it extensively for various tasks, including critical ones such as monitoring port binding events (in order to notify Neutron or Nova that a port has been bound to a given chassis). This OVSDB Monitor class used a distributed OVSDB lock to ensure that only a single worker was active and handling these events at a time.

This approach, while effective during periods of low activity, became a bottleneck when the cluster was under high demand, with ports taking a long time to transition to ACTIVE. Also, in the event of a failover, all events were lost until a new worker became active.

Distributed OVSDB events handler

To fix this problem, it was proposed to use a Consistent Hash Ring to distribute the load of handling events across multiple workers.

A new table called ovn_hash_ring was created in the Neutron database, where the Neutron workers capable of handling OVSDB events register themselves. The table contains the following columns:

| Column name | Type | Description |
|---|---|---|
| node_uuid | String | Primary key. The unique identification of a Neutron Worker. |
| hostname | String | The hostname of the machine this Node is running on. |
| created_at | DateTime | The time that the entry was created. For troubleshooting purposes. |
| updated_at | DateTime | The time that the entry was updated. Used as a heartbeat to indicate that the Node is still alive. |
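To make the heartbeat role of updated_at concrete, here is a minimal sketch using Python's built-in sqlite3 module. It is not the Neutron code (which goes through Neutron's own database layer); the function names register_node, heartbeat and active_nodes, and the 60-second timeout, are hypothetical choices for illustration.

```python
import sqlite3
import uuid
from datetime import datetime, timedelta

# Hypothetical schema mirroring the ovn_hash_ring columns described above.
SCHEMA = """
CREATE TABLE ovn_hash_ring (
    node_uuid  TEXT PRIMARY KEY,
    hostname   TEXT NOT NULL,
    created_at TEXT NOT NULL,
    updated_at TEXT NOT NULL
)
"""


def _ts(dt):
    # Fixed-width format so timestamps compare correctly as strings.
    return dt.strftime("%Y-%m-%d %H:%M:%S.%f")


def register_node(conn, hostname, now):
    """Insert a worker entry and return its generated node_uuid."""
    node_uuid = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO ovn_hash_ring VALUES (?, ?, ?, ?)",
        (node_uuid, hostname, _ts(now), _ts(now)),
    )
    return node_uuid


def heartbeat(conn, node_uuid, now):
    """Bump updated_at to signal that the worker is still alive."""
    conn.execute(
        "UPDATE ovn_hash_ring SET updated_at = ? WHERE node_uuid = ?",
        (_ts(now), node_uuid),
    )


def active_nodes(conn, now, timeout):
    """Return the node_uuids whose last heartbeat is newer than now - timeout."""
    rows = conn.execute(
        "SELECT node_uuid FROM ovn_hash_ring WHERE updated_at > ?",
        (_ts(now - timeout),),
    )
    return {node_uuid for (node_uuid,) in rows}


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
t0 = datetime(2020, 1, 1, 12, 0, 0)
a = register_node(conn, "controller-0", t0)
b = register_node(conn, "controller-1", t0)
heartbeat(conn, a, t0 + timedelta(seconds=60))  # only worker `a` heartbeats
alive = active_nodes(conn, t0 + timedelta(seconds=90), timedelta(seconds=60))
# Only `a` is alive: b's last heartbeat is older than the 60 s timeout,
# so b would be left out when the hash ring is built.
```

Filtering on updated_at is what lets a crashed worker drop out of the ring without anyone explicitly deleting its row.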

This table is used to form the Consistent Hash Ring. Fortunately, an implementation already exists in tooz, an OpenStack library. It was contributed by the OpenStack Ironic team, which also uses this data structure to spread the API request load across multiple Ironic Conductors.

Here's an example of how a Consistent Hash Ring works using tooz:

```python
from tooz import hashring

hring = hashring.HashRing({'worker1', 'worker2', 'worker3'})

# Returns set(['worker3'])
hring[b'event-id-1']

# Returns set(['worker1'])
hring[b'event-id-2']
```
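To show why a consistent hash ring suits this problem, here is a minimal from-scratch sketch using only the standard library. It is not tooz's code; the class name, the MD5 hash and the 32 virtual nodes per worker are illustrative choices. The property it demonstrates is the one that matters for failover: when a worker leaves the ring, only the keys it owned are reassigned, and every other key keeps its owner.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Minimal consistent hash ring (illustrative, not tooz's implementation).

    Each node is placed on the ring at several points ("virtual nodes")
    so that keys spread more evenly across nodes.
    """

    def __init__(self, nodes, replicas=32):
        self._replicas = replicas
        self._ring = {}       # ring position -> node name
        self._positions = []  # sorted ring positions
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(data: bytes) -> int:
        return int.from_bytes(hashlib.md5(data).digest(), "big")

    def add_node(self, node: str) -> None:
        for i in range(self._replicas):
            pos = self._hash(f"{node}-{i}".encode())
            self._ring[pos] = node
            bisect.insort(self._positions, pos)

    def remove_node(self, node: str) -> None:
        for i in range(self._replicas):
            pos = self._hash(f"{node}-{i}".encode())
            del self._ring[pos]
            self._positions.remove(pos)

    def get_node(self, key: bytes) -> str:
        """Return the first node clockwise from the key's hash."""
        if not self._positions:
            raise LookupError("the ring is empty")
        idx = bisect.bisect(self._positions, self._hash(key))
        return self._ring[self._positions[idx % len(self._positions)]]


ring = ConsistentHashRing(["worker1", "worker2", "worker3"])
keys = [f"event-id-{i}".encode() for i in range(1000)]
before = {k: ring.get_node(k) for k in keys}

ring.remove_node("worker2")  # simulate worker2 dying
after = {k: ring.get_node(k) for k in keys}

# Keys whose owner changed: these should be exactly worker2's former keys.
moved = {k for k in keys if before[k] != after[k]}
owned_by_worker2 = {k for k in keys if before[k] == "worker2"}
```

A plain `hash(key) % number_of_workers` scheme would instead reshuffle most keys whenever the number of workers changes.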

How OVSDB Monitor will use the new Hash Ring data structure

Every instance of the OVSDB Monitor class registers itself in the database and starts listening to the events coming from the OVSDB database. Each OVSDB Monitor instance has a unique ID that is part of the Consistent Hash Ring.

When an event arrives, each OVSDB Monitor instance hashes the event UUID against the hash ring, which returns one OVSDB Monitor instance ID. If this ID matches the instance's own ID, that instance processes the event; otherwise it ignores it.
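The dispatch rule above can be sketched as follows. The class HypotheticalOvsdbMonitor and its notify() method are invented for illustration (the real networking-ovn code is structured differently), and the ring is a small standard-library stand-in for tooz's HashRing. Every monitor sees every event, but each event is acted on by exactly one of them.

```python
import bisect
import hashlib
import uuid


def _hash(data: bytes) -> int:
    return int.from_bytes(hashlib.md5(data).digest(), "big")


def build_ring(node_ids, replicas=32):
    """Return a sorted list of (ring position, node_id) pairs."""
    return sorted((_hash(f"{n}-{i}".encode()), n)
                  for n in node_ids for i in range(replicas))


def owner(ring, event_uuid: str) -> str:
    """Map an event UUID to the node that owns it on the ring."""
    positions = [pos for pos, _ in ring]
    idx = bisect.bisect(positions, _hash(event_uuid.encode())) % len(ring)
    return ring[idx][1]


class HypotheticalOvsdbMonitor:
    """Illustrative stand-in for one Neutron worker's OVSDB monitor."""

    def __init__(self, node_id, ring):
        self.node_id = node_id
        self.ring = ring
        self.handled = []

    def notify(self, event_uuid: str) -> None:
        # Every monitor receives every event; only the ring owner acts on it.
        if owner(self.ring, event_uuid) == self.node_id:
            self.handled.append(event_uuid)


node_ids = [str(uuid.uuid4()) for _ in range(4)]
ring = build_ring(node_ids)
monitors = [HypotheticalOvsdbMonitor(n, ring) for n in node_ids]

events = [str(uuid.uuid4()) for _ in range(1000)]
for event in events:
    for monitor in monitors:
        monitor.notify(event)

handled = [e for m in monitors for e in m.handled]
per_worker = [len(m.handled) for m in monitors]
```

No coordination between workers is needed at event time: because they all hash against the same ring, they all agree on the owner independently.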

In the first image of this post, colors illustrate the change from the one-to-one relationship between Controllers and Workers in ACTIVE-STANDBY mode that we had before to a more scalable configuration with the Hash Ring, where each Controller can now host multiple Workers in ACTIVE-ACTIVE mode.

Benchmarking the new changes

To assess the improvements from using the Hash Ring, we used Browbeat, a performance analysis tool for OpenStack. We also created a new Browbeat scenario that takes OpenStack Nova out of the picture and simulates a VM on the available hypervisors by creating a network namespace and attaching a port to the OVS bridge. This enabled us to simulate numerous VMs booting concurrently without requiring the resources to host them all.
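The idea behind that scenario can be sketched as a dry-run helper that builds the commands for one simulated VM. This is not Browbeat's code: the function name fake_vm_commands, the namespace naming scheme, the br-int default and the sample UUID, MAC and address are all assumptions. The key step is setting external_ids:iface-id to the Neutron port UUID, which is how ovn-controller recognizes the interface and binds the logical port to its chassis.

```python
def fake_vm_commands(port_name, iface_id, mac, cidr, bridge="br-int"):
    """Return shell commands that plug a namespace-backed "fake VM" into OVS.

    Hypothetical helper: it only builds the command strings (a dry run).
    Running them would require root and a running Open vSwitch.
    """
    if len(port_name) > 15:
        # Linux interface names are limited to 15 characters.
        raise ValueError("port_name must be at most 15 characters")
    ns = f"ns-{port_name}"
    return [
        f"ip netns add {ns}",
        # Create an OVS internal port tagged with the Neutron port UUID.
        f"ovs-vsctl add-port {bridge} {port_name} -- "
        f"set Interface {port_name} type=internal "
        f"external_ids:iface-id={iface_id}",
        # Move the interface into the namespace and configure it there.
        f"ip link set {port_name} netns {ns}",
        f"ip netns exec {ns} ip link set {port_name} address {mac}",
        f"ip netns exec {ns} ip addr add {cidr} dev {port_name}",
        f"ip netns exec {ns} ip link set {port_name} up",
    ]


# Example values below are made up for illustration.
cmds = fake_vm_commands(
    "fakevm0",
    "11111111-2222-3333-4444-555555555555",
    "fa:16:3e:00:00:01",
    "10.0.0.10/24",
)
for cmd in cmds:
    print(cmd)
```

The MAC and IP address would have to match the ones Neutron allocated to the port for the simulated VM to become reachable.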

In addition to comparing the OVSDB Monitor class with and without the Consistent Hash Ring, we also tested ML2/OVN against ML2/OVS, which was the default driver in OpenStack Neutron at the time.

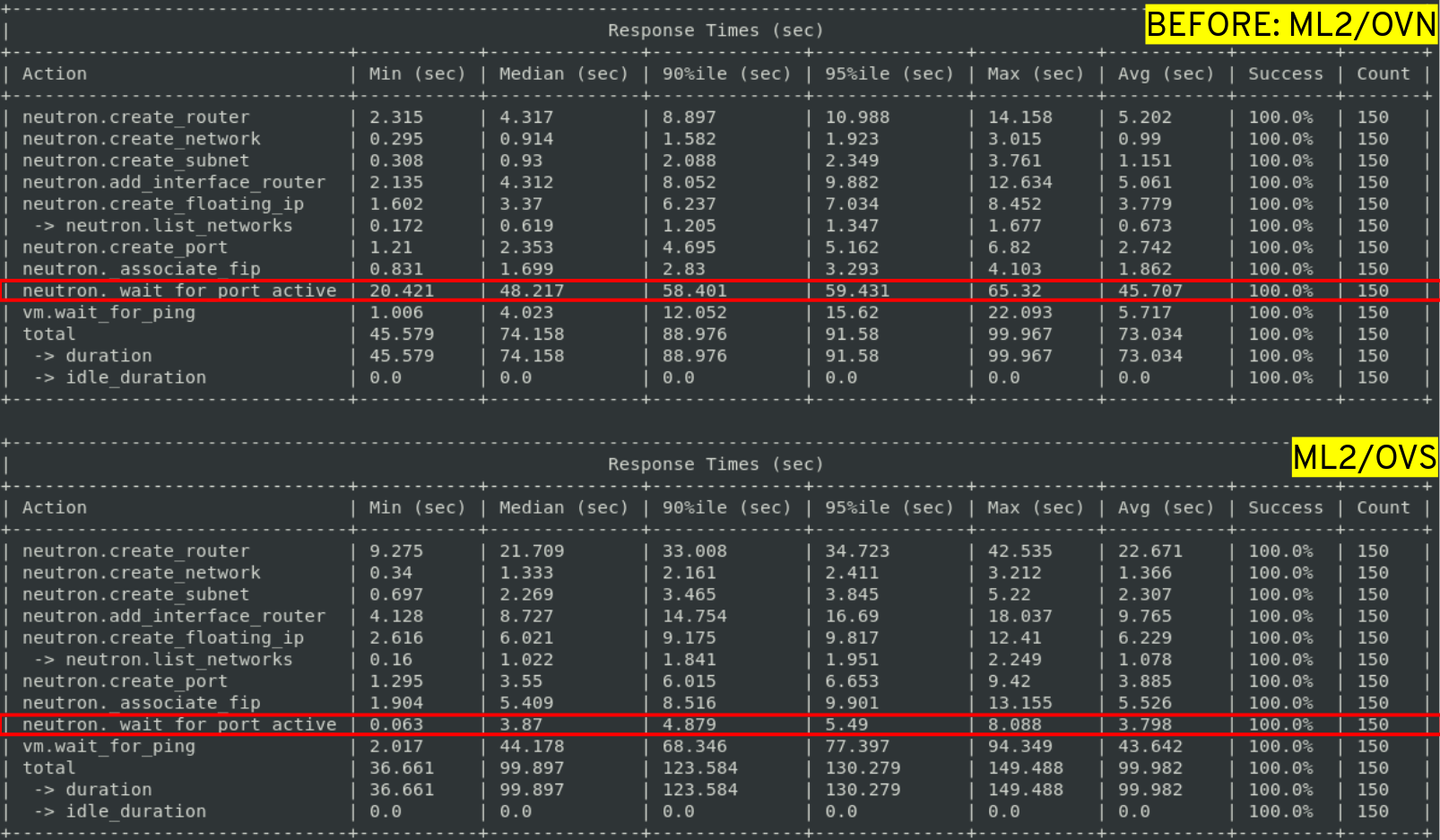

With concurrency set to 25 and a total of 150 VMs provisioned, the comparison between ML2/OVN and ML2/OVS BEFORE the introduction of the distributed OVSDB event handler looked like this:

The average wait time for an ML2/OVN port to become active was 45.7 seconds, whereas in ML2/OVS it was only 3.7 seconds. However, in ML2/OVS it took an average of 43.6 seconds for a VM to respond to a ping, while in ML2/OVN it took only 5.7 seconds. Overall, the two drivers were similar, with ML2/OVS being slightly faster.
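The "slightly faster" conclusion follows from adding up the two averages for each driver. This assumes the ping wait is measured after the port becomes ACTIVE, so the two figures can be summed; the numbers are the ones reported above.

```python
# Average times (seconds) reported above: concurrency 25, 150 VMs,
# before the distributed OVSDB event handler.
before = {
    "ML2/OVN": {"port_active": 45.7, "ping": 5.7},
    "ML2/OVS": {"port_active": 3.7, "ping": 43.6},
}

# Rough end-to-end figure, assuming the two averages can simply be added.
totals = {driver: round(sum(t.values()), 1) for driver, t in before.items()}
print(totals)
```

ML2/OVN spends most of its time waiting for the port to become active, while ML2/OVS spends most of it waiting for the first ping reply.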

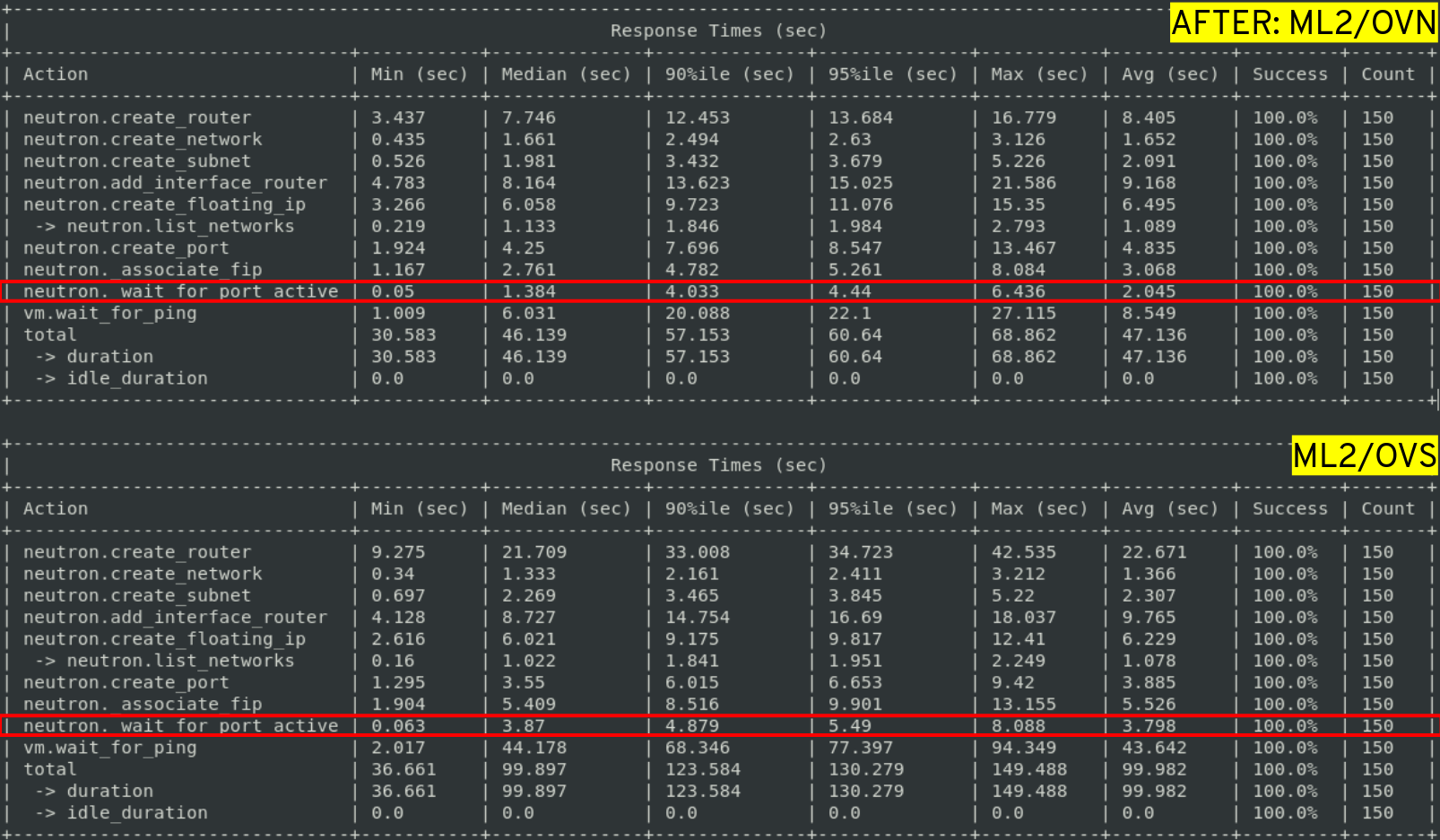

Using the same concurrency of 25 and 150 VMs in total, this is how ML2/OVN compared with ML2/OVS with the distributed OVSDB event handler:

The time it took for ports to become active in ML2/OVN dropped to 2 seconds (from an average of 45.7 seconds), and the average time for a VM to respond to a ping was 8.5 seconds. That's an impressive improvement: with the distributed event handler, a port takes only a fraction (4.4%) of the original time to become active!

Here are the ML2/OVN numbers side by side:

This difference becomes even more apparent when we increase the concurrency to 50 (up from 25). Here’s how the numbers look:

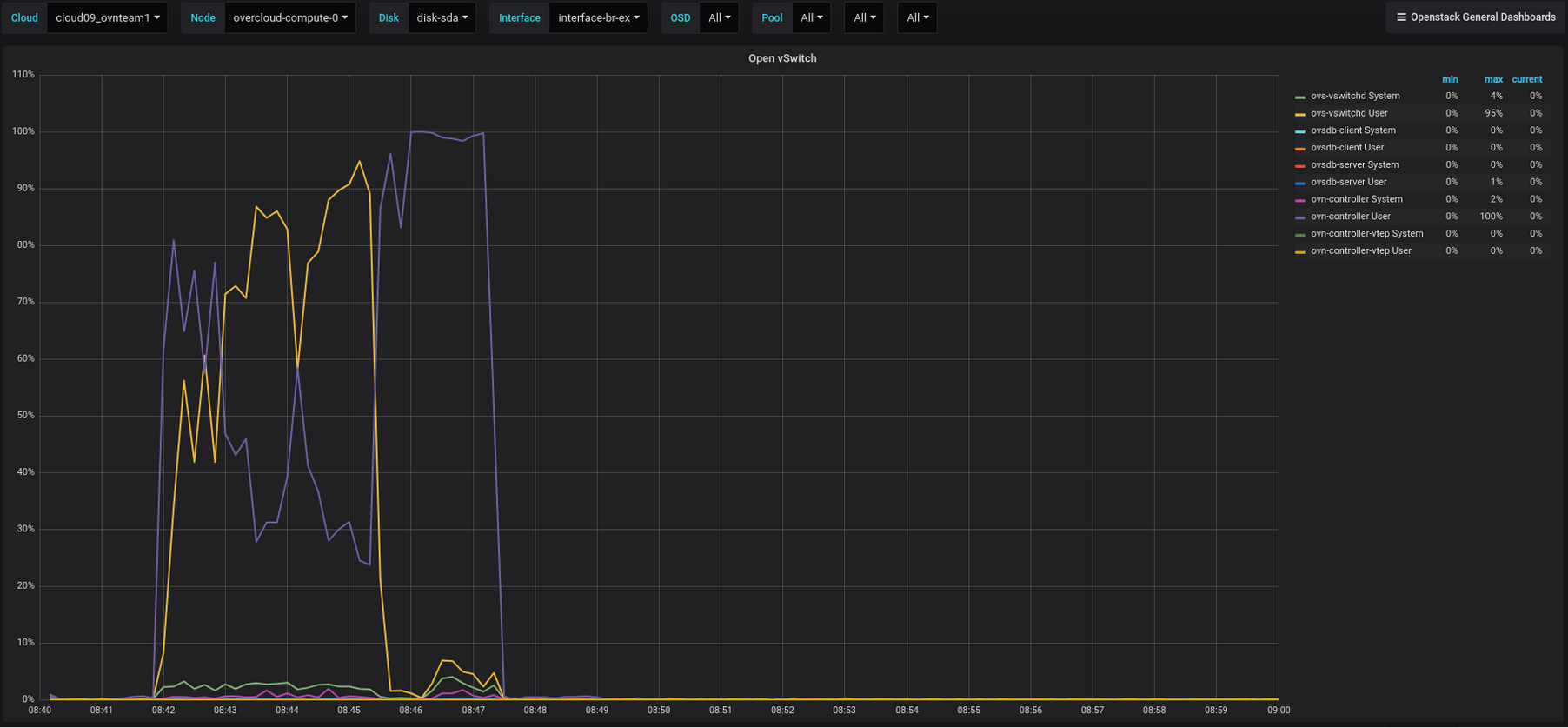

Now the average time for a port to become active dropped from 94.6 seconds to only 3 seconds. Note that, with the new approach, it took longer on average for the VM to respond to a ping. That's because ovn-controller now installs more flows per second, putting more pressure on the Open vSwitch daemon (running on the compute nodes), as can be observed in its CPU utilization.
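As a quick check of the ratios for both concurrency levels, the ML2/OVN port-to-ACTIVE averages reported in this post can be compared directly; nothing beyond those reported numbers is assumed.

```python
# Average ML2/OVN port-to-ACTIVE times (seconds) reported in this post.
port_active = {
    25: {"before": 45.7, "after": 2.0},
    50: {"before": 94.6, "after": 3.0},
}

fractions = {}
for concurrency, t in port_active.items():
    fractions[concurrency] = t["after"] / t["before"]
    speedup = t["before"] / t["after"]
    print(f"concurrency {concurrency}: {fractions[concurrency]:.1%} "
          f"of the original time ({speedup:.1f}x faster)")
```

The relative gain grows with concurrency, which fits the single-active-worker lock being the bottleneck: the more events arrive at once, the longer the queue in front of that one worker.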

Before, without the distributed event handler:

After, with the distributed event handler:

Final observations

- ovn-controller now installs more flows per second, putting more pressure on the Open vSwitch daemon (running on the compute nodes), as can be observed in its CPU utilization.

- Events are now processed in a distributed manner across the cloud. CPU consumption is spread across the controllers, rather than being concentrated on just one.

- At the time this work was done, ovn-controller was a single-threaded loop that recalculated everything on each iteration whenever a change happened. Because events are now processed in parallel by all the Neutron workers, ovn-controller (on the compute nodes) processes more changes per iteration, so its overall CPU consumption is also lowered.

- Control plane convergence in ML2/OVN is now significantly faster.
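The CPU observation about ovn-controller can be illustrated with a simplified cost model. It assumes each iteration costs roughly the same full recomputation $C$ no matter how many changes it picks up (an approximation of the recompute-everything loop described above). If $N$ changes arrive and each iteration absorbs $b$ of them, then

$$
\text{CPU}_{\text{total}} \approx \left\lceil \frac{N}{b} \right\rceil \cdot C
$$

For example, 150 changes handled one per iteration cost about $150\,C$, while the same 150 changes absorbed 25 at a time cost about $6\,C$.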

Issues encountered during this work

- Hash Ring race condition during worker initialization

- OVN metadata: Race condition (Metadata service is not ready for port)

- OVN metadata: Leftover namespaces in the environment

- Core OVN: Incremental processing memory leak and performance issues

- ML2/OVS: DVR FIP traffic not working

References

- The upstream spec file for this work

- Here you can find more details about the implementation that are not covered in this blog post, such as heartbeating, cleanup, etc.

- The two main patches containing the implementation in networking-ovn (this work predates the migration of ML2/OVN into the main Neutron repository):